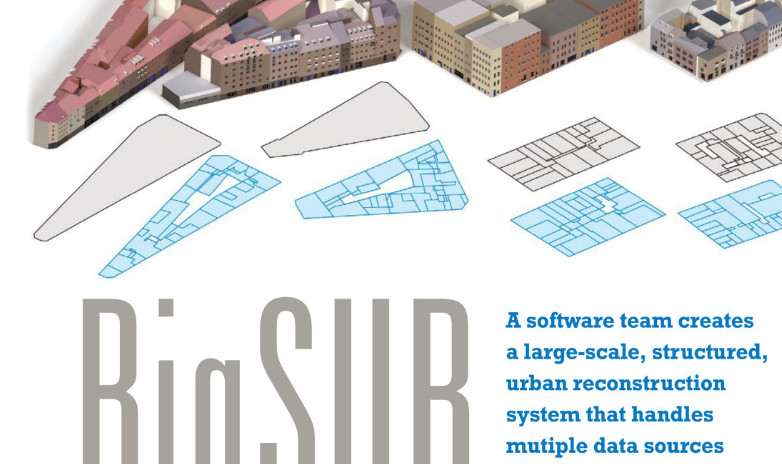

BigSUR features in the geospatial magazine xyHt this month. Read the article here.

Video has a lot of legacy components that need re-examining. Video used to be a hardware thing, with tapes and discs. Now it’s software: we all watch video on computers, but many of the old conventions are still with us. Even worse, the web was built for text (HTTP is the HyperText Transfer Protocol), and has brought its own conventions with it.

While trying to build a video platform, Strobee came up against many of these conventions, some of them helpful, others a hindrance. I’ve been collecting a list of them here:

But these apps take a lot of time to learn, and most people don’t have time to do that just to make a holiday video – my Gran is never going to be able to use Movie Maker, however motivated she is. So we needed simpler video editing.

In answer to this, video editing platforms such as Magisto and WeVideo have become automated and massively simplified. These are online applications that make it easy to upload and share video from your phone, tablet or desktop.

The problem with these online video editors is that they aren’t as interactive as the old desktop apps: you upload your footage to your provider in the cloud, and then wait to preview every action you take. The wait is caused by the expensive video splicing happening in the cloud, and it makes for a really ragged user experience, not what we want for Strobee at all:

With the advent of HTML5 video, it’s possible to splice video in the browser. At Strobee we hijacked the browser’s tools that were originally designed for variable-quality streaming, and used them to perform browser-side video splicing instead.
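The post doesn’t name the browser tools involved. Assuming they are the Media Source Extensions (MSE) API, which was built for adaptive-bitrate streaming, much of the splicing comes down to telling the player where each appended clip starts on the shared timeline (in MSE, by setting `SourceBuffer.timestampOffset` before each `appendBuffer` call). If each clip’s own timestamps start at zero, that offset is simply the running total of the earlier clips’ durations. The arithmetic is sketched in Python so it runs outside a browser; `Bee` and `splice_schedule` are hypothetical names, not Strobee’s code.

```python
from dataclasses import dataclass


@dataclass
class Bee:
    name: str        # hypothetical static file name for one short clip
    duration: float  # clip length in seconds


def splice_schedule(bees):
    """Return (name, offset) pairs that place each clip right after the previous one.

    Assumes every clip's internal timestamps start at zero, so the offset to
    apply is the total duration of all clips appended before it.
    """
    schedule = []
    offset = 0.0
    for bee in bees:
        schedule.append((bee.name, offset))
        offset += bee.duration  # the next clip starts where this one ends
    return schedule
```

MSE’s `"sequence"` append mode can do this bookkeeping automatically; tracking offsets explicitly also tells you where each bee sits in the stream, which helps with actions like jumping to a particular bee.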

The way this works is that each bee (one of our short video clips) is stored as a static file on the server. The browser uses our API to request file names based on the user’s preferences, then requests the corresponding static files from the server and patches them so they can be inserted into the video stream. This means that we can use all the usual static-file caching techniques to reduce cost (Memcache, Cloudflare, etc.), but still give each user a custom video stream:
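The split between a small personalized request and large static files can be sketched as follows. This Python version assumes a hypothetical catalogue of bee metadata (keys `id`, `user`, `created`), a hypothetical “followed users” preference, and a hypothetical `/bees/<id>.mp4` URL scheme. Only the short list of file names is personalized, so the video files themselves are identical for every viewer and can be cached like any other static asset.

```python
def choose_bees(catalogue, followed_users, max_bees=10):
    """Pick bees from followed users, newest first, and return their static URLs.

    catalogue: list of dicts with hypothetical keys "id", "user", "created".
    followed_users: set of user names the viewer wants to see.
    """
    picked = [bee for bee in catalogue if bee["user"] in followed_users]
    picked.sort(key=lambda bee: bee["created"], reverse=True)  # newest first
    # Each URL points at a file that is the same for every viewer,
    # so ordinary HTTP/CDN caching applies to the heavy video bytes.
    return [f"/bees/{bee['id']}.mp4" for bee in picked[:max_bees]]
```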

Clips delivered straight to your phone, with much less expensive work done in the cloud

It’s these custom streams that allow Strobee to give users a different video every time they come to the site, and even every time they perform an action (fast-forward, repeat a bee, change the length of a bee, preview a user’s stories). Even better, it means that the video never has to end: we can keep adding bees as fast as the user watches them, even recycling already-downloaded clips to save bandwidth.
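The never-ending stream can be sketched as a generator that serves fresh bees while they last and then falls back to clips the browser has already downloaded. The policy here (new bees first, then random replays of cached ones) is a hypothetical choice for illustration, and `endless_bees` is not a name from Strobee.

```python
import random
from typing import Iterable, Iterator


def endless_bees(new_bees: Iterable[str], seed: int = 0) -> Iterator[str]:
    """Yield fresh bees while they last, then recycle downloaded ones forever."""
    rng = random.Random(seed)
    downloaded: list[str] = []
    for bee in new_bees:
        downloaded.append(bee)  # remember it so it can be replayed without re-fetching
        yield bee
    if not downloaded:
        return  # nothing to recycle, so the stream ends
    while True:
        yield rng.choice(downloaded)  # reuse a cached clip: no extra bandwidth
```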

Strangely, the most complicated part has been creating a simple UI that people understand – letting people edit video while they think they are just navigating a friend-list.

One final feature we’re proud of is that users can even download their video. This uses the same process: the composition happens in the user’s browser, splicing the video in memory. We look forward to finding out how this scales, both on the server side (when a user requests all their bees as a single video file) and on the client side (when the browser tries to build the user’s video in memory).

And because people don’t look like their photos IRL… here is a distribution to sample from:

For the past few months I’ve been working on a re-imagining of video sharing. But I’ll let someone smarter than me summarize it:

You really have to play with Srrobee to understand how cool it really is. pic.twitter.com/rr4sufrqXC

— jon bradford 🚌🚚✈️🚅⛴ (@jd) February 16, 2016

This has been a long road, starting with a GCSE (a high school qualification) in Media Studies many years ago. I spent a very happy few days with a bunch of friends creating a trailer for “Starship Troopers”. We discovered that by far the most exciting approach (for 15-year-olds without any video editing experience) was to find all the short clips of people shooting guns (it was quite a violent film) and put them together. We could get away with this in class, as we’d just been taught about a technique called “montage”. We got a good grade for the project, but moved on to other things. (Apologies for the video and sound quality; it was recovered from VHS tapes.)

More recently, thanks to a lot of travelling and cycling, I bought an early action camera. Compared to an SLR camera or smartphone, these are great because they’re robust and easy to use. After a trip to Thailand I was left with loads (32 GB) of footage that was mostly wobbly, with noisy audio, and generally a bit shit, so I tried editing it down into something people would want to watch (here’s an old blog post). I made a few attempts at “professional” editing by carefully constructing a narrative, but concluded that there wasn’t enough footage to build one. Plus, there was no chance to go back and shoot more. In the end I found that stringing together many short clips was a very robust and fast way of manually editing a movie from bad footage.

The greatest observation that came out of this process was that selecting which short clips to use wasn’t very hard. As long as there was sufficient variety, and the clips kept moving, people wanted to watch more. Much more than if I tried to show them a wobbly 40-minute clip of me trying to feed peanuts to an elephant. What I thought was interesting video wasn’t interesting at all to other people: they just wanted to see all the different things I did on my holiday.

When I found myself with some free time, I decided to automate the process, and so Strobee started as a bit of a startup. It began as a desktop software project, but it became clear that the project belonged on the web.

I went to Thailand and, being a geek, took 3 cameras and came back with way too much mediocre footage: ~30 GB, or about 3 hours. Editing it all into something my friends would actually want to watch (or, fantastically, recommend that someone else watch) would have taken a long time, and was probably beyond my skill level and hardware. My solution was to pick 0.5-second sections from each video. I was really quite pleased with the result:
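As a worked example of the arithmetic: a 4-minute result is 240 s, which at 0.5 s per section is 480 sections; spread over about 3 hours (10,800 s) of footage, that is one section for every 22.5 s. The Python sketch below assumes a hypothetical rule of taking sections at that even stride across all the footage laid end to end; the post doesn’t say how the sections were actually placed, and `pick_sections` is an illustrative name.

```python
import bisect
from itertools import accumulate


def pick_sections(durations, clip_len=0.5, target_total=240.0):
    """Return (video_index, start_seconds) pairs at an even stride across all footage.

    Hypothetical rule: the videos are treated as one long timeline and a
    clip_len-second section is centred in each stride window, so the result
    lasts target_total seconds.
    """
    total = sum(durations)
    n_clips = int(target_total / clip_len)
    stride = total / n_clips                # seconds of footage per chosen section
    ends = list(accumulate(durations))      # cumulative end time of each video
    starts = [0.0] + ends[:-1]              # cumulative start time of each video
    picks = []
    for k in range(n_clips):
        pos = (k + 0.5) * stride            # centre of the k-th window on the global timeline
        i = min(bisect.bisect_right(ends, pos), len(durations) - 1)  # video containing pos
        local = pos - starts[i]
        # Centre the section on pos, clamped so it stays inside the video.
        start = min(max(local - clip_len / 2, 0.0), max(durations[i] - clip_len, 0.0))
        picks.append((i, start))
    return picks
```

For three one-hour videos, this yields 480 sections, the first starting at 11.0 s into the first video. Because the stride runs across the whole timeline rather than per file, a short video that falls between stride points contributes nothing; guaranteeing at least one section per video would be an easy variation.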

This is actually quite an interesting 4-minute video, as far as holiday videos go. Possibly about 3 minutes too long, but pretty succinct.

The thing that really struck me was that selecting the short section from each (sometimes quite long) clip was almost trivial, to the point where an algorithm could make pretty good guesses.
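One way an algorithm might make those guesses is to give every frame a quality score (how sharp or how steady it is, say) and pick the 0.5-second window with the highest total. The sketch below assumes such per-frame scores already exist and finds the best window with a sliding-window sum; the scoring itself, the 30 fps default, and the names `best_window` and `best_clip_start` are hypothetical, not Strobee’s method.

```python
def best_window(scores, window):
    """Return the start index of the contiguous window with the highest total score."""
    if window <= 0 or window > len(scores):
        raise ValueError("window must be between 1 and len(scores)")
    current = sum(scores[:window])
    best, best_start = current, 0
    for start in range(1, len(scores) - window + 1):
        # Slide right by one frame: add the new frame, drop the old one.
        current += scores[start + window - 1] - scores[start - 1]
        if current > best:  # strict '>' keeps the earliest of equal windows
            best, best_start = current, start
    return best_start


def best_clip_start(scores, fps=30.0, clip_len=0.5):
    """Convert the best window of per-frame scores into a start time in seconds."""
    window = max(1, round(fps * clip_len))  # e.g. 15 frames for 0.5 s at 30 fps
    return best_window(scores, window) / fps
```

The sliding sum keeps the search linear in the number of frames, so even long clips are cheap to scan.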

So now imagine a world in which everyone is uploading bees from their phones, shiny new pairs of Google Glasses (or do you wear a Google Glass?), cameras, etc. We pretty quickly come to the conclusion that strobees can, and should, be assembled on the fly from a large database. That is, we could stream a strobee (an endless video stream) to a user based on:

Early animations from my PhD. How a computer scientist might program building geometry. CGA / Split shape grammar, physically engineered, designed, and grown (which was the topic of my PhD…).