Demo mode is up and running on twak.org. Originally designed for the vcg website, the leeds-wp-projects makes it easy for users to explore your content via the pages’ featured images in-line, or in a fullscreen demo mode (and the high-octane extreme demo).

Author: twak

videos of open-world games

Sometimes it’s nice to have videos showing people just how big open world games have gotten.

glitches in the matrix

The great thing about graphics research is that my bugs are prettier than your bugs…

thesis latex source

Build instructions:

- The thesis/mk.sh script will try to build dissertation.pdf

- Latexmk is used to find resources to rebuild

- Most of the images were created using inkscape, and would be best edited using the same.

- I used svg-latex for images. The setup will reprocess any svgs, using a command line call to inkscape. This means that latex has to be configured to run scripts (enable write18 is the magic google search).

- I’m sorry but I don’t have a record of all the latex packages required for the build. Those that aren’t in source are available in Ubuntu package manager.

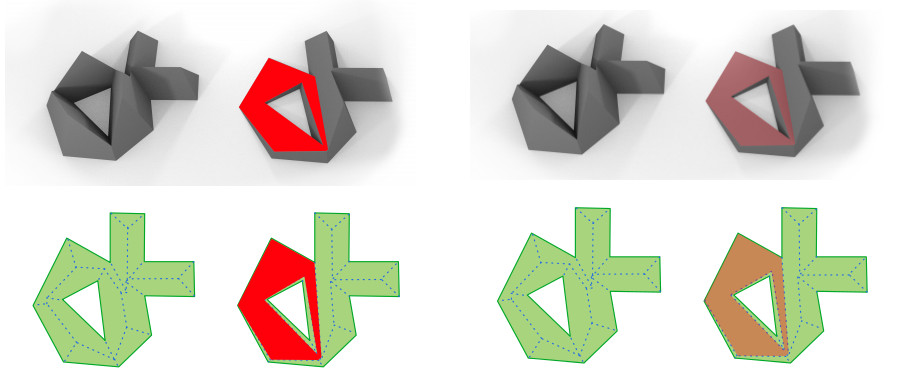

There are some strange transparency rendering bugs in some images in the Chrome pdf viewer (below left). Adobe and Ubuntu Document Viewer (below right) handle them fine.

building better video

Video has a lot of legacy components that need re-examining. Video used to be a hardware thing, with tape and discs. Now it’s software; we all watch video on computers, but many of the old conventions are still with us. Even worse, the web was built for text (hTtp), and has brought its own conventions with it.

While trying to build a video platform, Strobee came up against many of these conventions, some of them helpful, others a hindrance. I’ve been collecting a list of them here:

- Why is every video the same every time, when our Facebook page is different every time we return to it? One of Strobee’s design challenges has been allowing this, as well as allowing visitors to revisit and link to videos they’ve seen before.

- Why do we always have to scroll down to see more video on Instagram or Vine? We are used to our web-browsers working as electronic-book-reading-machines. To accommodate this, the current web-video idiom is magical moving pictures on each page of the video book. Video is video, and should be fullscreen. The interface should be built around this ideal.

- Square videos are a fad, and the world is starting to get over them. Our monitors, cinema, phones, and cameras seem to have settled on 16:9.

- Why does every video player need a play and pause button? They are legacy interfaces left over from hardware devices with actual buttons. There is no cost or side effect of not pausing a video. GIFs are a great example showing that we don’t need these controls any more.

- Long form video is a different medium than social video. It has different conventions, constraints, and ways of viewing. My parents make appointments with TV – they’ve got to get home on time to watch 60 minutes of Inspector Morse at 9pm. To justify this kind of effort, video has to be 10s of minutes long, and once you’ve gone to all that effort, and watched something for 10 minutes, you’re trapped into staying to watch the other 50 minutes.

- Strobee attempts to bridge the gap between long form and short form (micro-video) video – our video can be viewed in small sections, but also keeps playing endlessly for people who want to watch for 10s of minutes.

- Video navigation is a hard problem. It was very simple with cable TV: just pick a channel. Today we have many more possible variables (user, video clips, position in those clips, order of those clips, etc…). We experimented a bit, and just now Strobee has a fine-grain history (the clip gallery) along the bottom of the screen, and a coarse-grain explorer (the story navigator) up the right hand side of the screen.

- We should share video effortlessly and without thinking – in the same way we Instagram or tweet. We shouldn’t be worried that the video isn’t interesting to others or that the quality is lower than what we see on TV. We want to share our experiences using video with the click of a single button. Strobee is built to encourage this casual sharing. Clips are short, but what they lack in quality, they make up for in both variety and the interface’s ability to skip and explore.

- As with photos, some video clips are one-offs, but sometimes sequences of them tell a bigger story. Albums of photos can be more interesting than individual photos. When you look at a photo album, you have to do the mental work to reconstruct the event – there’s no reason, given the right format, why this shouldn’t be the case for video. Generating a perfect narrative (as we see in cinema) requires exact planning to capture every event – a totally different philosophy to the photo album.

- Unwatched video is a massive opportunity! Today, some of us have lots of video; in the future all of us will have lots of video. People with action-cameras (GoPros) are notorious for recording footage they never watch or share. They return from holiday with full memory cards, few have the patience to watch it all, fewer share any, and fewer still take the time to create a video that others would actually want to watch. It should be easy to share bulk footage like this. Strobee makes it a little easier, but there’s a long way to go…

Solve all Java errors

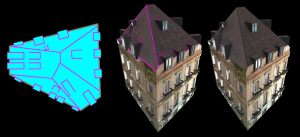

frankenGAN pretties

scary monsters and nice robots

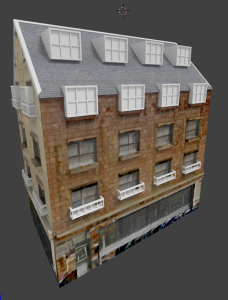

the bits that didn’t make the FrankenGAN paper: using GANs in practice (now we’re conditionally accepted to siga this year):

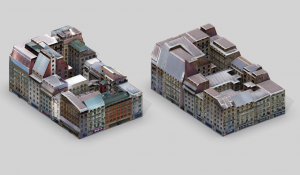

…into detailed geometry and textures:

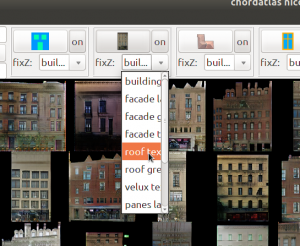

Every facade, roof and window has a unique texture (rgb, normal and specular maps) and layout. You can control the style generated by giving the system style-examples for each building part (roofs, facades…)

FrankenGAN uses a bunch of GANs to greeble buildings. One GAN goes from blank facade to window locations, another from window locations to facade textures, another from facade textures to detailed facade labels (window sills, doors…), another from window-shapes to window labels etc…

A cynic might suggest that all we did was solve every problem we encountered with another conditional GAN. An optimist might say that it shows how we might build a CityEngine that is entirely data-driven.

Let’s continue to look at some of the caveats to training so many GANs in the real world…

robots! Our training was plagued by “localised mode collapse” (aka Robots). In the following we see that the network hallucinates the same detail block again and again, often in the same location.

- Selecting the final training epoch. It seemed that it was never possible to completely get rid of robots, but it is possible to select which ones you get. By carefully selecting the training epoch to use, we found those with acceptable mode collapse. There were always some collapses.

- Dropping entries from the datasets. Our red-eyed robot seemed to be caused by red tail lights on cars at street level. Because we had relatively small datasets, it was possible to manually remove the worst offenders. Removing 1-5 entries seemed to make a difference sometimes.

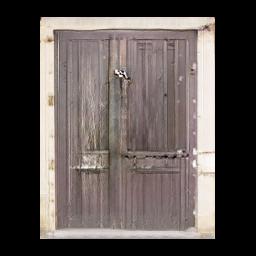

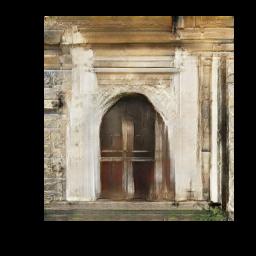

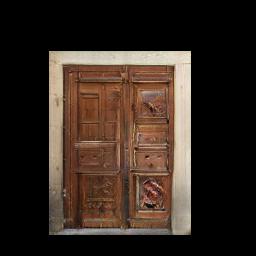

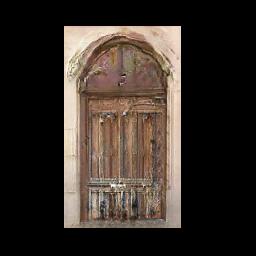

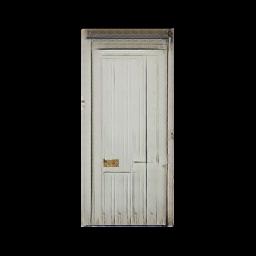

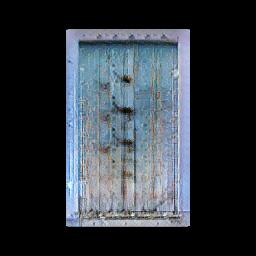

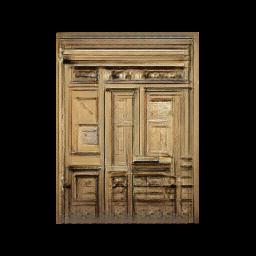

and output are our door pictures. So our training data was ~2000 pairs of red rectangles, and corresponding door textures.

Gathering this data is the major artistic process here. Imagine teaching a child to draw a door – which examples would you show them? You want them to learn what a door is, but also want them to understand the variety that you might see in a collection of doors. GANs tend to lock onto the major modes, and ignore the more eccentric examples. So if you want to see these, there had better be a bunch of examples illustrating them. The bulk of the door dataset I put together is regular doors, with a few extra clusters around modern and highly ornate doors to keep things interesting.

After 400 epochs of training (200 + 200 at a decreasing learning rate, 10 hours of training), the results are here. We had some nice examples:

So the solution was to edit the dataset…to remove some wooden doors, and examples with arches and too much wall. In this case we’d shown our child too many strange examples, and they kept drawing them for us. Even though the dataset fell from 2000 examples to 1300 examples, the results were generally better for being more focused. Every second it’s possible to manually view and possibly delete multiple images, so this can be done in less than an hour (although you get strange looks in the office, sitting in front of a monitor strobing door images, furiously hammering the delete key). Results here. Each training run was an overnight job, taking around 9 hours on my 1070.

These are looking good, given that we don’t have labels (like the FrankenGAN windows and facades). But there were still some problems.

At 200 epochs the learning rate starts decreasing; however, at this point the results weren’t good. So we’ll also bump the training to 300 epochs at constant learning rate, before decreasing for a further 300.
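As a rough sketch of that schedule (not the actual training code): the function below holds the learning rate flat for 300 epochs and then lowers it over a further 300. The linear decay to zero, the base rate of 0.0002 and the name `learning_rate` are all assumptions for illustration; the text only says the rate is constant and then decreases.

```python
def learning_rate(epoch: int, base_lr: float = 0.0002,
                  constant_epochs: int = 300, decay_epochs: int = 300) -> float:
    """Learning rate for a 0-indexed epoch: flat, then (assumed) linear decay to zero."""
    if epoch < constant_epochs:
        return base_lr
    # Number of epochs left before the decay phase ends, never negative.
    remaining = max(0, constant_epochs + decay_epochs - epoch)
    return base_lr * remaining / decay_epochs


if __name__ == "__main__":
    # Print the rate at a few points across both phases.
    for e in (0, 299, 300, 450, 599, 600):
        print(e, learning_rate(e))
```

Setting `constant_epochs=200, decay_epochs=200` gives the shape of the earlier 200 + 200 run.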

Being a GAN there are still some bad samples in there, but generally we have pushed the results in the direction that we need them to move.

As we see, creating the dataset and training parameters is an involved iterative process that takes knowledge, artistic license, and technical ability to solve. I don’t pretend to have any of these things, but I can’t wait to see what real texture artists do with the technology.

So there are many artistic decisions to be made when training GANs, but in a very different way to current texturing pipelines. Game artists are particularly well positioned to make use of GANs and deep-texturing approaches because they already have the big expensive GPUs that are used to train our networks. I typically use mine to develop code in the day, and to train at night.

Maybe future texture artists might be armed with hiking boots, light probes, and cameras rather than Wacoms and Substance; at least they will have something to do while their nets train.

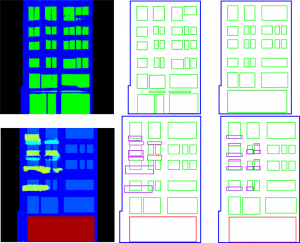

regularization (this did end up in the final paper thanks to the reviewers). Because we applied GANs to known domains (facades, windows, roofs…) we had a good idea of what should be created, and where. This allows us to tidy up (regularize) the outputs using domain priors (we know windows should be rectangular, so we force them to be rectangular). So we can alternate GANs which create structure (labels) and style (textures), which allows basic regularization to happen in between. Because the regulators are out-of-network, they don’t have to be differentiable…and could even be a human-in-the-loop. This gives a very reliable way to mold the chaotic nature of a GAN to our domain.
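To make the "force them to be rectangular" idea concrete, here is a deliberately simple stand-in, not the regularizer from the paper: it takes a binary label grid (1 = window, an assumed encoding) and replaces each 4-connected blob with its filled bounding box. Because it runs outside the network, nothing here needs to be differentiable.

```python
from collections import deque


def rectangularize(mask):
    """Replace each 4-connected blob of 1s in a 2D grid with its filled bounding box."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            if mask[y][x] != 1 or seen[y][x]:
                continue
            # Flood fill from this pixel to find the extent of the blob.
            queue = deque([(y, x)])
            seen[y][x] = True
            y0 = y1 = y
            x0 = x1 = x
            while queue:
                cy, cx = queue.popleft()
                y0, y1 = min(y0, cy), max(y1, cy)
                x0, x1 = min(x0, cx), max(x1, cx)
                for ny, nx in ((cy + 1, cx), (cy - 1, cx), (cy, cx + 1), (cy, cx - 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] == 1 and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            # Paint the whole bounding box as window.
            for fy in range(y0, y1 + 1):
                for fx in range(x0, x1 + 1):
                    out[fy][fx] = 1
    return out


if __name__ == "__main__":
    ragged = [[0, 1, 1, 0],
              [0, 1, 0, 0],
              [0, 0, 0, 0],
              [0, 0, 0, 1]]
    for row in rectangularize(ragged):
        print(row)
```

The tidied labels can then be handed to the next (texture) GAN in the chain.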

In addition, because the domain of the GANs used is tightly controlled (we do the facade, then the roofs, then the windows), it acts as an inter-GAN form of regularisation. We only get facades on the front of buildings etc… Obvious, but very powerful when we have good models for our domain.

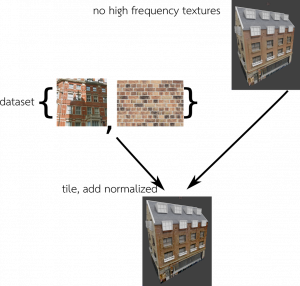

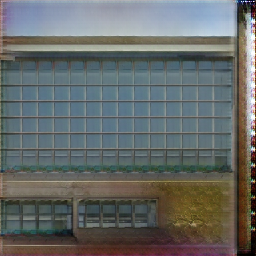

super super networks. Our super-resolution network was a little bit last minute and hacked together. It took the low resolution GAN outputs (256×256 pixels) and ran a super-resolution GAN to create an output of around 80 pixels per (real-world) meter. Unlike most existing super-resolution networks, we wanted the results to be inventive and to include a stylistic element (so some blurry low res orange walls become brick while on another building it could become stucco). The architecture had the advantage that it used a very similar pipeline to the other networks in the paper, but we had earlier plans to do something different.
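For a feel of the numbers, a back-of-envelope sketch: at 80 pixels per metre, how big is the target texture, and how far does a 256×256 input have to be stretched? The facade size and the assumption that one low-resolution image covers the whole facade are mine, purely for illustration.

```python
def target_resolution(width_m, height_m, pixels_per_metre=80):
    """Pixel size of a texture covering width_m x height_m at the given density."""
    return round(width_m * pixels_per_metre), round(height_m * pixels_per_metre)


def upscale_factor(width_m, height_m, low_res=256, pixels_per_metre=80):
    """Per-axis stretch from a low_res x low_res image to the target size."""
    target_w, target_h = target_resolution(width_m, height_m, pixels_per_metre)
    return target_w / low_res, target_h / low_res


if __name__ == "__main__":
    # A hypothetical facade, 10m wide and 15m tall.
    print(target_resolution(10, 15))
    print(upscale_factor(10, 15))
```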

The super-resolution style results presented in FrankenGAN were never very strong. The underlying colour tended to overwhelm the style vector to determine the results. This was a common theme when training the networks – the facade texture network had a tendency to select colour based on window distribution. For example missing windows in a column were frequent in NY (they were occluded by fire escapes), so the texture network loved to paint these buildings brick-red.

However, we generally overcame this problem with BicycleGAN to make the nets do something useful; we can clearly see some different styles given the same low-resolution inputs:

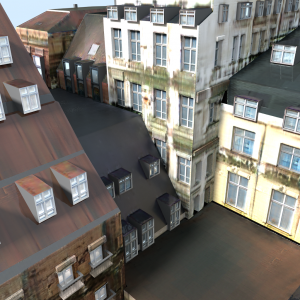

We see that variety in the types of network and domain (windows, facades, roofs) come together to create a very strong effect. This “variety synergy” seems to be enough to stop people looking too closely at any one texture result. We were somewhat surprised to get results looking this good just a week before the deadline (and then had to write a paper very quickly about them…).

A nice side-result was using super-resolution to clean up images. Here we see an early super-resolution result (fake_B) removing the horizontal seams from Google Streetview images (real_B) by processing a heavily blurred image (real_A):

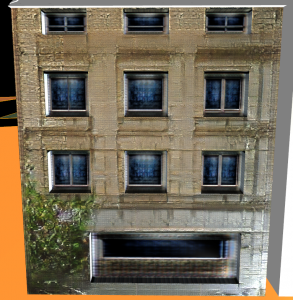

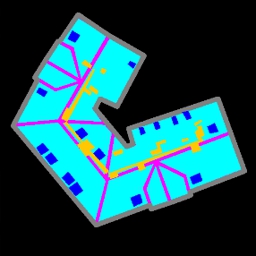

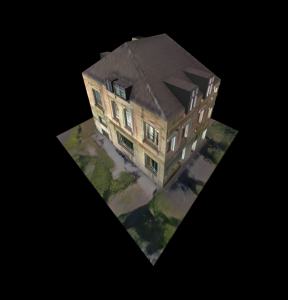

building skirts. A fun early result was generating the area around buildings (aka skirts). Here the labels (top left) were transformed into the other textures:

What is nice here is the variety in the landscape, and how it adapts to the lighting. For example, on an overcast day the surrounding area (bottom left) looks much less green than a similar texture in the “day”. More results. The final roof results in FrankenGAN lost all this “skirt” texture because of some of the network conditioning we applied.

tbh they don’t look great in 3D, they have no idea how to synchronise with the building geometry, so there are problems such as the footpaths not leading to doors.

Even though the patches were randomly located, the results ended up with many repeating textures at the frequency of our patches.

While these results have “artistic” merit, they weren’t really what we were looking for. However, we note that the window reconstruction, and repeating pattern (when close to our patch size), was very strong. Perhaps multi-scale patches might help in a future project [edit: CUT did something similar to this in 2020]…

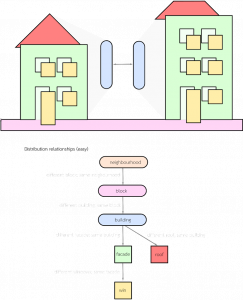

- a probabilistic bake-with: so that there’s a 10% chance the window style is baked at the block level, 30% chance at the building, and 30% at the facade level.

- geometrically driven bake-with: so facades which face the street might have a different style than those that face their neighbours.
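The probabilistic bake-with idea from the list above could be sketched like this. The 10% / 30% / 30% split comes from the text; handing the leftover 30% to a per-window level, and the names `BAKE_LEVELS` and `sample_bake_level`, are my assumptions.

```python
import random

# (level, probability). The final "window" entry is an assumed fallback for the
# 30% not accounted for in the text.
BAKE_LEVELS = [("block", 0.10), ("building", 0.30), ("facade", 0.30), ("window", 0.30)]


def sample_bake_level(rng):
    """Pick the level at which a window style gets baked, using the table above."""
    r = rng.random()
    cumulative = 0.0
    for level, probability in BAKE_LEVELS:
        cumulative += probability
        if r < cumulative:
            return level
    # Guard against floating point rounding at the very top of the range.
    return BAKE_LEVELS[-1][0]


if __name__ == "__main__":
    rng = random.Random(1)
    print([sample_bake_level(rng) for _ in range(5)])
```

Everything below the chosen level would then share one style vector, so a "block" draw gives a whole block of matching windows.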

where next? I had wanted to use GANs to create “stylistic” specular and normal maps, but never got around to it. In the end I hacked together something very quickly. This took the RGB textures, assumed depth-from-greyscale value, and computed the normal and specular maps from these. Use the layers menu in sketchfab to explore the different maps (yes yes, I know the roof is too shiny):
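The depth-from-greyscale hack is easy to sketch. This is not the original code, just the usual trick under stated assumptions: treat a greyscale image (values in 0 to 1) as a height field, take finite differences, and normalise. The `strength` parameter and the border clamping are my choices, and the specular map is left out.

```python
import math


def normal_map(grey, strength=1.0):
    """Per-pixel unit normals (nx, ny, nz) from a 2D greyscale grid used as height."""
    h, w = len(grey), len(grey[0])
    normals = []
    for y in range(h):
        row = []
        for x in range(w):
            # Central differences, clamped to the image at the borders.
            dx = grey[y][min(x + 1, w - 1)] - grey[y][max(x - 1, 0)]
            dy = grey[min(y + 1, h - 1)][x] - grey[max(y - 1, 0)][x]
            nx, ny, nz = -dx * strength, -dy * strength, 1.0
            length = math.sqrt(nx * nx + ny * ny + nz * nz)
            row.append((nx / length, ny / length, nz / length))
        normals.append(row)
    return normals


if __name__ == "__main__":
    ramp = [[0.0, 0.5, 1.0],
            [0.0, 0.5, 1.0]]
    print(normal_map(ramp)[0][1])
```

To store the result as an RGB texture, each component is usually remapped from [-1, 1] to [0, 255].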

openGL pipeline

loading the street view trike

Around the corner we can see reflections of the same bespectacled guy riding the trike around Soho…